Enterprise Level Products

Flite Golf

I served as Director of Unity Development at Flite Golf, leading the creation of a modular, white-label golf entertainment platform that powered full Topgolf-style venues for multiple brands. Our Unity-based system needed to adapt to each client’s branding, UI, and gameplay while staying stable enough to run across dozens of bays in a live venue. By designing the architecture around configuration-driven theming and feature toggles, we could spin up new sites and tailor experiences for different markets without rebuilding core systems, which helped increase customer retention by around 40 percent across partner venues.
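As a rough illustration of configuration-driven theming and feature toggles, here is a minimal Python sketch. It is not the production Unity code; the config keys, colors, and feature names are all hypothetical. The idea is that each client supplies only its differences, which are layered over shared platform defaults.

```python
from copy import deepcopy

# Hypothetical platform defaults shared by every venue.
BASE_CONFIG = {
    "theme": {"primary_color": "#1A73E8", "logo": "default_logo.png"},
    "features": {"tournaments": True, "leaderboards": True, "kids_mode": False},
}


def merge_config(base: dict, overrides: dict) -> dict:
    """Return a new config where client overrides win over base values, recursively."""
    merged = deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def is_enabled(config: dict, feature: str) -> bool:
    """Unknown features default to off so a missing key never enables a code path."""
    return bool(config.get("features", {}).get(feature, False))


# Hypothetical per-client override: only the differences are listed.
client_overrides = {
    "theme": {"primary_color": "#0B6E4F"},
    "features": {"kids_mode": True, "tournaments": False},
}

venue_config = merge_config(BASE_CONFIG, client_overrides)
```

Because the merge returns a new dictionary, the shared defaults stay untouched, so a new venue can be described by a small override file rather than a fork of the core systems.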

On the technical side, I led the implementation of robust networking for real-time, multi-bay multiplayer that remained responsive under heavy load. Through careful optimization and architecture improvements, we improved multiplayer performance by roughly 25 percent, which directly impacted how smooth and “premium” the experience felt to guests. I also streamlined project planning and budgeting, shifting the team to predictable, milestone-driven delivery that consistently landed projects about 10 percent under budget and ahead of schedule.

Equally important was building the team itself. I personally interviewed, hired, trained, and mentored every member of the Unity development team, established clear development workflows, and introduced tools and practices that increased productivity by roughly 75 percent. The result was a small, high-performing group that could handle everything from new game modes and UI rebrands to venue-specific customizations without sacrificing code quality or maintainability.

Scope of Work:

- Directed Unity development for a scalable, white-label golf entertainment platform used in Topgolf-style venues.

- Designed a modular architecture for branding, UI, and gameplay that could be quickly reconfigured per client.

- Led implementation and optimization of networking for real-time, multi-bay multiplayer experiences.

- Streamlined project planning, estimation, and budgeting to deliver projects under budget and ahead of schedule.

- Established development workflows, tooling, and coding standards that significantly boosted team productivity.

- Interviewed, hired, onboarded, and mentored all Unity developers, building a cohesive, high-performing team.

https://www.centurygolf.com/copy-of-golf-entertainment

Roche Training

I architected and led development of an enterprise VR training platform for Roche, delivering an immersive way for scientists and technicians to learn complex lab procedures without flying to training centers or shipping expensive equipment. Built in Unity, the system lets trainees interact with accurate virtual replicas of Roche hardware and workflows, practice step by step in a safe environment, and receive real-time feedback on their performance before ever touching real lab equipment.

To support Roche and other clients like VR Learning Time, I designed a modular lesson framework built around ScriptableObjects so that content creators and subject matter experts could author and update training scenarios without changing code. Each lesson is defined by data that is injected into a shared set of core systems for interaction, progression, assessment, and analytics. When we add a new feature for one lesson, it becomes instantly available to every existing and future lesson, which dramatically reduces development overhead and keeps behavior consistent across the platform.
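The separation between authored lesson data and shared core systems can be sketched as follows. This is a simplified Python analogue, not the Unity ScriptableObject implementation; the class names, step names, and actions are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Step:
    """One authored step: what to show and which action completes it."""
    prompt: str
    expected_action: str


@dataclass(frozen=True)
class Lesson:
    """Pure data, analogous to a lesson asset a subject matter expert would edit."""
    title: str
    steps: tuple


@dataclass
class LessonRunner:
    """Shared progression and assessment logic reused by every lesson."""
    lesson: Lesson
    index: int = 0
    mistakes: int = 0
    log: list = field(default_factory=list)

    @property
    def finished(self) -> bool:
        return self.index >= len(self.lesson.steps)

    def submit(self, action: str) -> bool:
        """Advance on the expected action; otherwise record a mistake."""
        if self.finished:
            return False
        step = self.lesson.steps[self.index]
        correct = action == step.expected_action
        self.log.append((step.prompt, action, correct))
        if correct:
            self.index += 1
        else:
            self.mistakes += 1
        return correct


# Hypothetical lesson content; the step names are illustrative only.
sample_lesson = Lesson(
    title="Sample loading",
    steps=(
        Step("Put on gloves", "wear_gloves"),
        Step("Open the sample tray", "open_tray"),
        Step("Load the sample", "load_sample"),
    ),
)

runner = LessonRunner(sample_lesson)
for action in ["wear_gloves", "load_sample", "open_tray", "load_sample"]:
    runner.submit(action)
```

Any improvement to `LessonRunner`, such as richer logging for analytics, applies to every `Lesson` without touching the lesson data itself.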

I also led the engineering team using Agile methodology, running sprint planning, backlog grooming, code reviews, and regular releases to keep quality high and delivery predictable. This combination of reusable systems, data-driven design, and disciplined process allowed us to roll out new enterprise-grade VR training modules quickly while maintaining a robust, scalable codebase.

Scope of Work:

- Architected an enterprise VR training platform for Roche using Unity.

- Designed a reusable, ScriptableObject-driven lesson framework for multiple clients and training scenarios.

- Implemented core systems for interaction, step-by-step progression, assessments, and analytics.

- Enabled non-programmers to build and update lesson content safely through data-driven tools and workflows.

- Led the engineering team with Agile practices (sprint planning, backlog management, code reviews, and releases).

- Optimized for maintainability and scalability so new features automatically extended to all existing lesson plans.

DimeTime:

NFL VR Training

At Radd3 I worked on a suite of HTC Vive training products that gave professional athletes a way to take high quality mental reps in Virtual Reality without adding physical wear and tear. The flagship experience was an NFL training tool where coaches could import offensive and defensive plays, assign matchups, and drop players into fully immersive game situations with crowd noise, pressure, and distractions. Players ran through their reads and responsibilities from a first person view, while coaches collected detailed session data that would be impossible to capture in a standard practice.

I contributed heavily to both the core VR experience and the Coaches Companion application that ran on Windows Surface tablets. On the VR side I implemented the UI systems that players used to navigate sessions and drills, built the audio system that handled crowd, coaching, and on-field soundscapes, and created the wide receiver animation blending so catches could happen at variable positions around the receiver instead of at fixed keyframed poses. For the Coaches Companion, I implemented a responsive UI that made it easy to configure plays, schedule sessions, and review metrics from the sideline or meeting room, and I wired the client into the backend services using UnityWebRequest for authentication and data exchange.

During my time at Radd3 I also solo developed Matt Williams’ VR Batting Practice, another HTC Vive experience that placed players at home plate in a major league stadium. The simulator supported multiple pitch types like sliders, curveballs, sinkers, knuckleballs, change ups, and fastballs, and at the end of each session it presented a clear, visual breakdown of contact quality and outcomes to help players refine their swing. Both products combined realism, feedback, and repetition to give pros meaningful practice time in VR.

Scope of Work:

- Developed an HTC Vive NFL training platform that let players take immersive mental reps on imported offensive and defensive plays.

- Built VR UI systems for session flow, play selection, and in-experience interaction.

- Implemented the audio system for crowd, on-field, and coaching feedback to simulate game day pressure.

- Created wide receiver animation blending so catch animations could adjust to different ball locations around the player.

- Developed the Coaches Companion application targeting Windows Surface tablets with a responsive, touch friendly UI.

- Integrated client to backend communication using UnityWebRequest for authentication, session data, and analytics.

- Solo designed and developed Matt Williams’ VR Batting Practice for HTC Vive, including pitch logic, hitting feedback, and post session performance UI.

360° VR-Optional

Live Broadcasting

I helped build Immersive Media’s 360 degree, VR optional live broadcasting platform that was used by major broadcasters during the Rio 2016 Olympics, letting viewers in the US and UK experience events as fully navigable 360 video and VR instead of traditional flat feeds. This work took me from casual game development into true enterprise grade software, powering a global audience for one of the first large scale Olympic VR and 360 broadcast offerings.

My primary responsibility was the application layer. I designed and implemented the UI that viewers used to navigate live feeds and camera angles, as well as the internal control interfaces that the broadcast team used to decide what viewers would see and when. On the rendering side, I wrote a custom shader that allowed certain input feeds to render with transparency, and added cropping controls so production staff could frame and composite multiple 360 and flat video windows in real time inside the broadcasting tool. All of this had to run reliably on tight broadcast timelines, sync with live video pipelines, and stay flexible enough for evolving production needs, right up through the Olympics and the eventual acquisition of Immersive Media by Digital Domain in 2016.

Scope of Work:

- Contributed to a 360 degree, VR optional live broadcasting platform used for Rio 2016 Olympic coverage.

- Built viewer facing UI for navigating live 360 streams, camera angles, and viewing modes.

- Built internal control room UI for producers to control which feeds, angles, and overlays were sent to viewers in real time.

- Implemented custom shaders to support transparency and compositing of multiple live input feeds.

- Added cropping and layout tools so operators could adjust playback windows dynamically during broadcasts.

- Collaborated with the broader engineering and production teams to ensure the system met broadcast reliability standards for a global, high stakes live event.

Dormakaba Interactive Exhibit

I designed and developed the Dormakaba Interactive Exhibit, an augmented reality experience that let customers explore Dormakaba’s access solutions in a hands on, immersive way. Instead of relying on static brochures or hardware bolted to a wall, visitors could use a tablet to bring 3D product models to life, view them in context, and step through guided demos that explained how each solution worked in real environments like offices, hotels, and secure facilities.

Under the hood, the exhibit was built in Unity with a modular, data driven content pipeline so new products, scenes, and languages could be added without rewriting code. Product teams could update visuals and messaging while the core AR interaction, animation, and UI systems stayed the same. The result was a portable, reusable exhibit that Dormakaba could take to events, showrooms, and client demos, giving sales and marketing teams a consistent, memorable way to showcase complex hardware and systems.

Scope of Work:

- Architected and implemented a Unity based AR exhibit for Dormakaba product demonstrations.

- Built interactive 3D product visualizations with hotspots, animations, and step-by-step narratives.

- Designed a data driven content system so new products and configurations could be added without code changes.

- Created an intuitive UX for both guided sales presentations and self directed exploration.

- Optimized performance for mobile devices handling detailed 3D models in event environments.

- Collaborated with Dormakaba stakeholders to translate product features into clear, interactive stories suitable for trade shows and customer demos.

Rescan 360

I implemented the AR and VR viewing modes for Rescan 360’s commercial real estate platform, giving clients a radically new way to explore large properties digitally. Using Unity, I built an experience where users could step into fully reconstructed buildings, either on a mobile device in AR or in an immersive VR session, and move through hallways, rooms, and key areas as if they were physically on site. Instead of static photos or traditional 360 tours, they could navigate rich 3D spaces that came directly from Rescan 360’s head-worn scanning system, which captured the geometry of each building as technicians walked through it.

My work focused on the client side of that pipeline. I integrated the scanned environment data into a flexible Unity scene loader that respected user permissions, so different roles only saw the spaces and tools they were authorized for. On top of that, I designed and implemented a mobile responsive UI that worked cleanly on both Android and iOS, with controls tuned for handheld AR walkthroughs as well as VR-style navigation and inspection. The result was a unified viewing application that showcased the full power of Rescan 360’s hardware and data pipeline and contributed to the hardware-and-software solution receiving a Red Dot design award in the “Best of the Best” category in 2021.

Scope of Work:

- Implemented AR and VR viewing options in Unity so users could explore commercial real estate scans on mobile and in immersive modes.

- Built a mobile responsive UI system for Android and iOS, including navigation, inspection, and mode switching.

- Integrated head-mounted scanner output into the client app, loading building geometry based on user permissions and role.

- Created flexible scene loading and viewing flows so new scans and sites could be added without major code changes.

- Collaborated with the engineering team to optimize performance and UX across devices and refine the experience into a polished client-ready tool.

Web3 Products

Owner-Governed Asset Ledger

I designed, developed, and deployed the Owner-Governed Asset Ledger (OGAL), an open source, public good protocol on Solana that acts as a shared registry for user generated content across multiple games and studios. OGAL gives creators verifiable on-chain ownership of their assets under a single canonical program, while enforcing royalty rules, collection alignment, and governance without each team needing to deploy their own custom contract. It currently backs the live Token Toss UGC pipeline on Solana mainnet, where every player-built level is minted and updated through OGAL.

At the protocol level, OGAL organizes content into namespaces, each controlled by a specific authority wallet. The program derives deterministic PDAs for configuration, mint authority, and collections, then validates these invariants on every mint and manifest update. This lets studios rotate authorities, pause minting, or migrate to new namespaces without redeploying the program or losing their audit trail. Each object minted through OGAL stores only a URI and content hash, so teams are free to use whatever manifest format best fits their experience, from JSON level layouts to GLB bundles or other custom asset packs.
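Storing only a URI and a content hash means a client can fetch a manifest from anywhere and still check that it is the content the creator published. A minimal Python sketch of that check follows. It assumes SHA-256 as the hash function and uses a hypothetical record shape and URL; it does not reproduce the actual OGAL account layout.

```python
import hashlib
import json


def content_hash(manifest_bytes: bytes) -> str:
    """Hex SHA-256 digest of the exact off-chain bytes (assumed hash function)."""
    return hashlib.sha256(manifest_bytes).hexdigest()


def make_record(uri: str, manifest_bytes: bytes) -> dict:
    """Hypothetical stand-in for the on-chain record: a URI plus a content hash."""
    return {"uri": uri, "content_hash": content_hash(manifest_bytes)}


def verify(record: dict, fetched_bytes: bytes) -> bool:
    """True only if the fetched bytes match the hash recorded at publish time."""
    return content_hash(fetched_bytes) == record["content_hash"]


# Hypothetical JSON level layout; any byte format would work the same way.
level = {"name": "Demo Level", "pegs": [[0, 1], [2, 3]]}
manifest = json.dumps(level, sort_keys=True, separators=(",", ":")).encode("utf-8")

record = make_record("https://example.com/levels/demo.json", manifest)
tampered = manifest.replace(b"Demo", b"Evil")
```

Since the hash covers raw bytes, the same check works for JSON layouts, GLB bundles, or any other asset pack without the registry needing to understand the format.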

Scope of Work:

- Protocol and on-chain architecture for OGAL, including namespaces, PDAs, authority rotation, and mint guard rails.

- Rust/Anchor implementation of the Solana program and deployment to Solana mainnet-beta.

- Design of the manifest model that stores only URIs and hashes, allowing arbitrary off-chain formats for UGC.

- Node.js CLI tools for namespace initialization, minting, updating manifests, pausing/unpausing, and authority management.

- Unity integration patterns via the Solana Toolbelt for Unity, including configuration assets and transaction helpers.

- Documentation and operational runbooks for onboarding external teams, debugging failed transactions, and maintaining production namespaces.

Token Toss

I solo-built Token Toss end-to-end: a UGC mobile game on Solana powered by the OGAL Protocol (custom Solana program) and the Solana Toolbelt for Unity (built on top of the Solana Unity SDK). The game introduces a new primitive for games (a global, open UGC asset layer), where creators verifiably own their assets (by wallet) and can update them, while anyone can freely read and use those assets across experiences.

In Token Toss, players design levels in-game and publish them on-chain via OGAL; the creator pays only at publish time. Any player can then load that level by its mint address with zero additional blockchain transactions. This removes platform lock-in, preserves asset utility even if a single title sunsets, and lets creators keep more of what they earn versus 30%–75% platform cuts.

Scope of Work:

- Product, client, and on-chain architecture from concept to alpha.

- Unity client with in-game level editor and mint flow.

- OGAL Solana program for ownership, update authority, and open reads.

- Solana Toolbelt for Unity to make wallet, mint, and read operations seamless.

Review the git repo at https://github.com/Ghiblify-Games/OGAL-And-Token-Toss

Download the free Android game at https://www.ghiblifygames.com

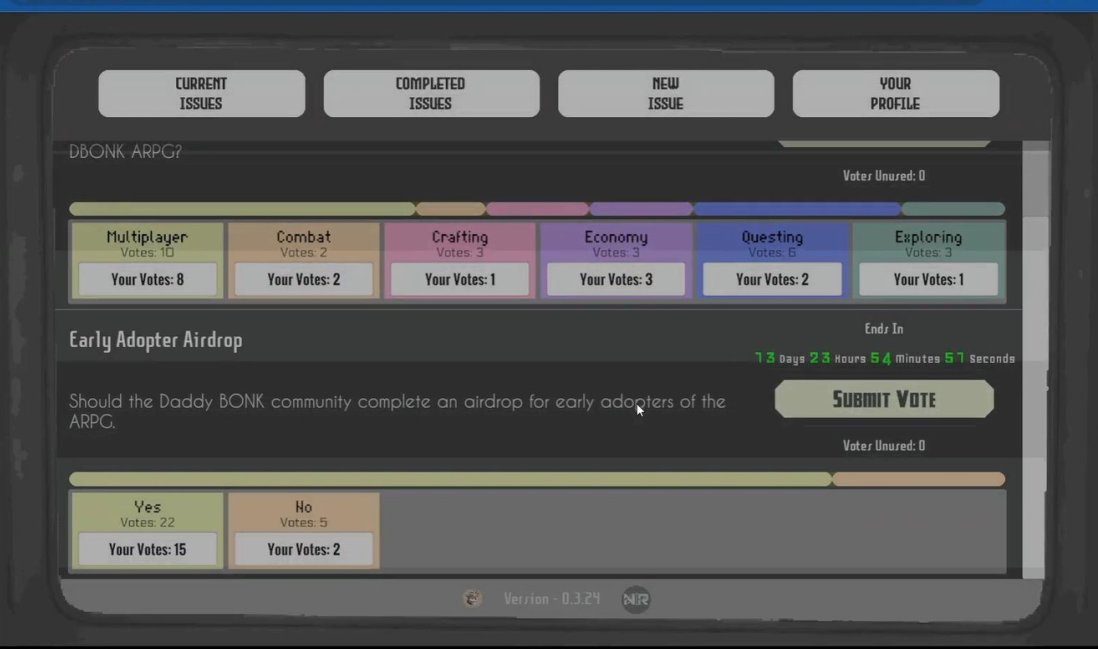

Web3 Quadratic Voting

I led the design and development of the NanoRes Digital Collectible Mint Launchpad, a first-of-its-kind WebGL experience built in Unity on Solana. Instead of a static mint page, it gives collectors a playful, interactive way to connect their wallets, explore the collection, and mint in real time, all framed as a branded, mini-game-like experience that teams can ship directly in the browser.

Behind the scenes, the Launchpad plugs into our in-house DC3 pipeline and Solana Toolbelt for Unity so collections can be configured without writing code. Teams define traits, rarity, and metadata, set their mint phases and pricing, then the Launchpad handles wallet connection, on-chain minting, live supply updates, and error handling. The front end is fully skinnable so partners can reskin the experience to their own IP while we keep the underlying mint logic, performance tuning, and blockchain integration consistent and reliable.

Scope of Work:

- Product and UX design for an interactive, game-like NFT mint experience in WebGL.

- Unity WebGL client implementation with wallet connect, mint flow, and real-time transaction feedback.

- Integration with the NanoRes DC3 pipeline for asset layers, rarity configuration, and metadata generation.

- Solana integration using the Solana Toolbelt for Unity to simplify wallet, mint, and read operations.

- Theming system that lets partner teams rebrand the Launchpad without touching core code.

- Performance and build pipeline optimization for smooth WebGL delivery across desktop and mobile browsers.

DC3

See more videos at https://youtube.com/playlist?list=PLgB_fPUHIuMkMAwyphRYpNur0zHmmykKd&si=uPkaB2FqhspcJmP4

I designed and developed the NanoRes Digital Collectible Collection Creator (DC3), a no code creation suite that lets anyone build and launch their own digital collectible collections without needing blockchain or engineering experience. DC3 guides users through importing art layers, defining traits and rarities, previewing combinations, and generating full collections with clean, standards compliant metadata. The application handles all the heavy lifting around trait math, asset combinations, and metadata generation, so creators can stay focused on art direction and collection design instead of scripts and JSON files.
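To give a sense of the trait math involved, here is a small Python sketch of rarity-weighted generation with duplicate rejection. It is an illustration under assumptions, not DC3's actual engine: the layer names, weights, and the simplified metadata shape are all hypothetical.

```python
import random

# Hypothetical layers: each trait value carries a relative rarity weight.
LAYERS = {
    "Background": {"Blue": 60, "Red": 30, "Gold": 10},
    "Body": {"Robot": 50, "Alien": 50},
    "Hat": {"None": 70, "Cap": 25, "Crown": 5},
}


def max_combinations(layers: dict) -> int:
    """Number of distinct trait combinations the layers can produce."""
    total = 1
    for values in layers.values():
        total *= len(values)
    return total


def generate_collection(layers: dict, size: int, seed: int = 0) -> list:
    """Draw unique trait combinations, sampling each layer by its weights."""
    if size > max_combinations(layers):
        raise ValueError("requested size exceeds the number of unique combinations")
    rng = random.Random(seed)  # seeded so a collection can be regenerated
    seen = set()
    items = []
    while len(items) < size:
        combo = tuple(
            rng.choices(list(values), weights=list(values.values()), k=1)[0]
            for values in layers.values()
        )
        if combo in seen:
            continue  # reject duplicates and draw again
        seen.add(combo)
        items.append({
            "name": f"Collectible #{len(items) + 1}",
            "attributes": [
                {"trait_type": layer, "value": value}
                for layer, value in zip(layers, combo)
            ],
        })
    return items


collection = generate_collection(LAYERS, size=10, seed=42)
```

Checking the requested size against the number of possible combinations up front is the kind of guardrail that prevents a creator from asking for more unique collectibles than their layers can produce.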

Under the hood, DC3 is built as a modular, multi platform tool that speaks directly to Solana today, with a roadmap for additional chains. It outputs ready to mint collections, complete with images and metadata, and can optionally plug into the NanoRes Reconstructor Unity SDK so those collectibles are usable in real time 3D experiences. This means a creator can go from layered PSD or PNG art to a live collection that is ready for games, galleries, or other interactive experiences, using one consistent pipeline. The goal is to make professional grade digital collectibles accessible to independent artists, studios, and brands without requiring them to staff a blockchain engineering team.

Scope of Work:

- Designed the overall architecture and UX for DC3, a no code digital collectible collection creator.

- Implemented asset import workflows for layered artwork, traits, and rarity definitions.

- Built the combination and metadata engine that generates full collections and associated metadata files.

- Added real time previews for rarity distribution and sample collectibles to help creators validate collections before export.

- Implemented export pipelines for Solana compatible collections, with a design that can extend to additional blockchains.

- Integrated optional hooks for the NanoRes Reconstructor Unity SDK so generated collectibles can be reconstructed inside Unity projects.

- Focused on non technical usability, including guided flows, validation, and guardrails that prevent common mistakes while still supporting advanced configurations.

Solana Toolbelt for Unity

I designed, developed, and packaged the NanoRes Solana Toolbelt, a Unity SDK that makes it straightforward for game teams to add Solana wallet connectivity, NFT minting, and OGAL-powered UGC flows to their projects. Instead of every team wiring Solana.Unity-SDK from scratch, the Toolbelt ships as a drop in Unity package with runtime libraries, prefabs, wallet adapters, and OGAL services that can be installed directly through the Unity Package Manager or as an Asset Store package.

Under the hood, the Toolbelt wraps the Solana Unity SDK and organizes everything into a clean UPM layout: runtime assemblies, Android templates and native plugins, shared UI resources, and a dedicated Toolbelt namespace for managers and utility scripts. Editor tooling provides setup wizards and inspectors that guide developers through configuration, so they can connect wallets, select RPC endpoints, and hook up OGAL namespaces without digging into low level Solana code.

To help teams get productive quickly, I also shipped a complete Solana Wallet Demo sample that showcases connection flows, UI prefab usage, and transaction helpers, plus extended documentation and evaluation notes inside the package. Together, this turns Solana integration from a weeks long research task into something that can be integrated, tested, and iterated on inside a single sprint, while keeping the whole stack open and maintainable under the NanoRes GitHub organization.

Scope of Work:

- Designed the overall architecture for the NanoRes Solana Toolbelt Unity package, including runtime, editor, and sample layout.

- Wrapped and curated the Solana Unity SDK into a cohesive set of C# services, wallet adapters, and OGAL integration utilities.

- Implemented editor tools and setup wizards to configure wallets, RPC endpoints, and package dependencies inside Unity.

- Built a Solana Wallet Demo scene that demonstrates real wallet connection, UI prefabs, and transaction flows end to end.

- Authored package documentation, installation guides, and QA notes to support adoption by external teams as a public good SDK.

NanoRes

Digital Collectible

Launchpad

I led the design and development of the NanoRes Digital Collectible Mint Launchpad, a first-of-its-kind WebGL experience built in Unity on Solana. Instead of a static mint page, it gives collectors a playful, interactive way to connect their wallets, explore the collection, and mint in real time, all framed as a branded, mini-game-like experience that teams can ship directly in the browser.

Behind the scenes, the Launchpad plugs into our in-house DC3 pipeline and Solana Toolbelt for Unity so collections can be configured without writing code. Teams define traits, rarity, and metadata, set their mint phases and pricing, then the Launchpad handles wallet connection, on-chain minting, live supply updates, and error handling. The front end is fully skinnable so partners can reskin the experience to their own IP while we keep the underlying mint logic, performance tuning, and blockchain integration consistent and reliable.

Scope of Work:

- Product and UX design for an interactive, game-like NFT mint experience in WebGL.

- Unity WebGL client implementation with wallet connect, mint flow, and real-time transaction feedback.

- Integration with the NanoRes DC3 pipeline for asset layers, rarity configuration, and metadata generation.

- Solana integration using the Solana Toolbelt for Unity to simplify wallet, mint, and read operations.

- Theming system that lets partner teams rebrand the Launchpad without touching core code.

- Performance and build pipeline optimization for smooth WebGL delivery across desktop and mobile browsers.

Mixed Reality Products

Topgolf AR Bay Lens

I architected and led development of Topgolf’s AR Bay Lens Unity port, a computer vision powered augmented reality experience that lets guests point their iOS or Android device at the hitting bay and see rich game visuals and overlays anchored directly to the real world. Built in Unity using AR Foundation and Unity Sentis, the system recognizes the physical bay layout in the camera feed, locks a stable coordinate system to key features, and then renders responsive UI, effects, and game feedback right on top of the mat, ball dispenser, and surrounding environment. The result turns a standard bay into an interactive AR canvas that enhances games without requiring any new hardware in the venue.

On the technical side, I designed the end to end pipeline for on device computer vision and AR alignment. Unity Sentis runs lightweight machine learning models locally to identify bay elements and estimate pose, while AR Foundation handles tracking, plane detection, and platform specific AR details for both iOS and Android. I focused heavily on calibration, performance, and robustness so the experience remained stable across different venues, lighting conditions, and device classes, all while keeping frame rates high enough for a smooth, production ready guest experience.

Scope of Work:

- Architected the AR Bay Lens prototype that augments real Topgolf bays using mobile devices.

- Implemented computer vision workflows with Unity Sentis to detect bay geometry and key visual features in the camera feed.

- Used AR Foundation to build a cross platform AR layer that aligned virtual content to the physical bay on both iOS and Android.

- Designed and implemented the Unity client UI, interaction patterns, and calibration flows for a quick, guest friendly setup.

- Optimized rendering, model execution, and tracking to maintain high frame rates on a wide range of mobile hardware.

- Collaborated with product, design, and on site teams to iterate from prototype to venue ready experience that could plug into Topgolf’s broader digital ecosystem.

DimeTime:

NFL VR Training

At Radd3 I worked on a suite of HTC Vive training products that gave professional athletes a way to take high quality mental reps in Virtual Reality without adding physical wear and tear. The flagship experience was an NFL training tool where coaches could import offensive and defensive plays, assign matchups, and drop players into fully immersive game situations with crowd noise, pressure, and distractions. Players ran through their reads and responsibilities from a first person view, while coaches collected detailed session data that would be impossible to capture in a standard practice.

I contributed heavily to both the core VR experience and the Coaches Companion application that ran on Windows Surface tablets. On the VR side I implemented the UI systems that players used to navigate sessions and drills, built the audio system that handled crowd, coaching, and on-field soundscapes, and created the wide receiver animation blending so catches could happen at variable positions around the receiver instead of at fixed keyframed poses. For the Coaches Companion, I implemented a responsive UI that made it easy to configure plays, schedule sessions, and review metrics from the sideline or meeting room, and I wired the client into the backend services using UnityWebRequest for authentication and data exchange.

During my time at Radd3 I also solo developed Matt Williams’ VR Batting Practice, another HTC Vive experience that placed players at home plate in a major league stadium. The simulator supported multiple pitch types like sliders, curveballs, sinkers, knuckleballs, change ups, and fastballs, and at the end of each session it presented a clear, visual breakdown of contact quality and outcomes to help players refine their swing. Both products combined realism, feedback, and repetition to give pros meaningful practice time in VR.

Scope of Work:

- Developed an HTC Vive NFL training platform that let players take immersive mental reps on imported offensive and defensive plays.

- Built VR UI systems for session flow, play selection, and in-experience interaction.

- Implemented the audio system for crowd, on-field, and coaching feedback to simulate game day pressure.

- Created wide receiver animation blending so catch animations could adjust to different ball locations around the player.

- Developed the Coaches Companion application targeting Windows Surface tablets with a responsive, touch friendly UI.

- Integrated client to backend communication using UnityWebRequest for authentication, session data, and analytics.

- Solo designed and developed Matt Williams’ VR Batting Practice for HTC Vive, including pitch logic, hitting feedback, and post session performance UI.

VR Disasterville:

Army National Guard

VR Disasterville is a Meta Quest 3 colocation system that lets a team of players share the same physical space and see the same virtual world aligned around them. The experience was built as a mixed reality activation for the Army National Guard, transforming ordinary high school gyms into walkable disaster zones where squads of students fight floods, wildfires, and earthquake aftermath together. Using inside-out tracking, hand tracking, and physical props mapped one-to-one into the virtual scene, players can grab oars, hoses, and rescue tools with their real hands and feel fully grounded in the mission.

On the technical side, I designed the colocation and networking architecture so that up to five Quest 3 headsets share a single coordinate system, even on an offline local network. Each deployment starts with a fast calibration pass that locks the virtual environment to the real gym layout, then distributes that reference space to every headset so avatars, props, and obstacles line up within a tight error margin. I used Unity, Photon Fusion, and the Meta SDK to synchronize player positions, interactions, and mission state, while a separate operator interface handles session control, safety boundaries, and quick resets between groups.
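The core of sharing one coordinate system is a rigid transform from each headset's local frame into the common reference space. The Python sketch below shows a simplified floor-plane (2D) version solved from two reference markers. It is an illustration only: the real pipeline works in 3D with the Meta SDK, and the marker positions and function names here are hypothetical.

```python
import math


def solve_alignment(local_a, local_b, shared_a, shared_b):
    """Return (theta, tx, ty) mapping headset-local floor coords to shared coords.

    Uses two reference markers seen in both frames; assumes equal scale (meters).
    """
    local_angle = math.atan2(local_b[1] - local_a[1], local_b[0] - local_a[0])
    shared_angle = math.atan2(shared_b[1] - shared_a[1], shared_b[0] - shared_a[0])
    theta = shared_angle - local_angle
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Translation is whatever remains after rotating the first marker.
    tx = shared_a[0] - (cos_t * local_a[0] - sin_t * local_a[1])
    ty = shared_a[1] - (sin_t * local_a[0] + cos_t * local_a[1])
    return theta, tx, ty


def to_shared(point, alignment):
    """Apply the rigid transform to a headset-local point."""
    theta, tx, ty = alignment
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    x, y = point
    return (cos_t * x - sin_t * y + tx, sin_t * x + cos_t * y + ty)


# Hypothetical calibration: two floor markers at known gym positions.
shared_markers = ((0.0, 0.0), (4.0, 0.0))
# The same markers as one headset happened to measure them in its own frame.
local_markers = ((1.0, 1.0), (1.0, 5.0))

alignment = solve_alignment(*local_markers, *shared_markers)
prop_in_gym = to_shared((0.0, 3.0), alignment)
```

Once every headset has its own alignment, positions sent over the network can be expressed in the shared frame, so avatars and props land in the same physical spot for every player.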

Scope of Work:

- Architected the Quest 3 colocation pipeline that aligns multiple headsets to a shared physical space.

- Implemented offline, LAN based multiplayer using Photon Fusion with deterministic session control.

- Integrated Meta hand tracking and physical props so real world tools match their virtual counterparts.

- Built calibration tools to bind virtual disaster environments to arbitrary gym layouts in minutes.

Roche Biomedical VR Training

I architected and led development of an enterprise VR training platform for Roche, delivering an immersive way for scientists and technicians to learn complex lab procedures without flying to training centers or shipping expensive equipment. Built in Unity, the system lets trainees interact with accurate virtual replicas of Roche hardware and workflows, practice step by step in a safe environment, and receive real-time feedback on their performance before ever touching real lab equipment.

To support Roche and other clients like VR Learning Time, I designed a modular lesson framework built around ScriptableObjects so that content creators and subject matter experts could author and update training scenarios without changing code. Each lesson is defined by data that is injected into a shared set of core systems for interaction, progression, assessment, and analytics. When we add a new feature for one lesson, it becomes instantly available to every existing and future lesson, which dramatically reduces development overhead and keeps behavior consistent across the platform.

I also led the engineering team using Agile methodology, running sprint planning, backlog grooming, code reviews, and regular releases to keep quality high and delivery predictable. This combination of reusable systems, data-driven design, and disciplined process allowed us to roll out new enterprise-grade VR training modules quickly while maintaining a robust, scalable codebase.

Scope of Work:

- Architected an enterprise VR training platform for Roche using Unity.

- Designed a reusable, ScriptableObject-driven lesson framework for multiple clients and training scenarios.

- Implemented core systems for interaction, step-by-step progression, assessments, and analytics.

- Enabled non-programmers to build and update lesson content safely through data-driven tools and workflows.

- Led the engineering team with Agile practices (sprint planning, backlog management, code reviews, and releases).

- Optimized for maintainability and scalability so new features automatically extended to all existing lesson plans.
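
As a rough illustration of the data-driven lesson idea described above, the sketch below separates lesson content (pure data) from a shared runner that handles progression and assessment. It is written in Python for brevity rather than the Unity C# used in the project, and every name in it (`LessonStep`, `Lesson`, `LessonRunner`) and the sample lesson content are hypothetical, not taken from the Roche codebase.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LessonStep:
    """One step of a lesson, defined purely as data (hypothetical schema)."""
    instruction: str
    expected_action: str


@dataclass(frozen=True)
class Lesson:
    """A lesson is a titled, ordered set of steps; it contains no logic."""
    title: str
    steps: tuple


@dataclass
class LessonRunner:
    """Shared core system: progression and assessment for any lesson."""
    lesson: Lesson
    index: int = 0
    mistakes: int = 0
    log: list = field(default_factory=list)

    @property
    def finished(self) -> bool:
        return self.index >= len(self.lesson.steps)

    def perform(self, action: str) -> bool:
        """Check a trainee action against the current step and record it."""
        if self.finished:
            raise RuntimeError("lesson already complete")
        step = self.lesson.steps[self.index]
        correct = action == step.expected_action
        self.log.append((step.instruction, action, correct))
        if correct:
            self.index += 1   # advance only on the expected action
        else:
            self.mistakes += 1
        return correct


# Invented sample content; a real lesson would be authored as a data asset.
demo = Lesson(
    title="Load a sample rack",
    steps=(
        LessonStep("Open the loading door", "open_door"),
        LessonStep("Insert the rack", "insert_rack"),
    ),
)

runner = LessonRunner(demo)
runner.perform("insert_rack")   # wrong order, counted as a mistake
runner.perform("open_door")
runner.perform("insert_rack")
```

Because the runner never refers to a specific lesson, any improvement made to it (for example, richer logging) would apply to every lesson that is defined this way.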

360° VR-Optional

Live Broadcasting

I helped build Immersive Media’s 360 degree, VR optional live broadcasting platform that was used by major broadcasters during the Rio 2016 Olympics, letting viewers in the US and UK experience events as fully navigable 360 video and VR instead of traditional flat feeds. This work took me from casual game development into true enterprise grade software, powering a global audience for one of the first large scale Olympic VR and 360 broadcast offerings.

My primary responsibility was the application layer. I designed and implemented the UI that viewers used to navigate live feeds and camera angles, as well as the internal control interfaces that the broadcast team used to decide what viewers would see and when. On the rendering side, I wrote a custom shader that allowed certain input feeds to render with transparency, and added cropping controls so production staff could frame and composite multiple 360 and flat video windows in real time inside the broadcasting tool. All of this had to run reliably on tight broadcast timelines, sync with live video pipelines, and stay flexible enough for evolving production needs, right up through the Olympics and the eventual acquisition of Immersive Media by Digital Domain in 2016.

Scope of Work:

- Contributed to a 360 degree, VR optional live broadcasting platform used for Rio 2016 Olympic coverage.

- Built viewer facing UI for navigating live 360 streams, camera angles, and viewing modes.

- Built internal control room UI for producers to control which feeds, angles, and overlays were sent to viewers in real time.

- Implemented custom shaders to support transparency and compositing of multiple live input feeds.

- Added cropping and layout tools so operators could adjust playback windows dynamically during broadcasts.

- Collaborated with the broader engineering and production teams to ensure the system met broadcast reliability standards for a global, high stakes live event.
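
The transparency and cropping features mentioned above come down to two small per-pixel operations. The sketch below shows them on the CPU in Python purely to illustrate the math; it is not the shader from the broadcasting tool, and the function names and the `(left, bottom, width, height)` crop rectangle layout are assumptions made for this example.

```python
def crop_uv(u, v, rect):
    """Map a window-space UV in [0, 1] into the cropped region of a feed.

    rect is (left, bottom, width, height) in normalized texture coordinates.
    """
    left, bottom, width, height = rect
    return (left + u * width, bottom + v * height)


def blend_over(src_rgb, src_alpha, dst_rgb):
    """Standard 'over' blend of a source pixel onto an opaque background."""
    return tuple(
        s * src_alpha + d * (1.0 - src_alpha)
        for s, d in zip(src_rgb, dst_rgb)
    )


# Sample the middle of a window that shows only the central half of a feed.
center_sample = crop_uv(0.5, 0.5, (0.25, 0.0, 0.5, 1.0))

# A 25% opaque red overlay composited onto a blue background pixel.
mixed = blend_over((1.0, 0.0, 0.0), 0.25, (0.0, 0.0, 1.0))
```

In a shader the same arithmetic would typically run per fragment, with the crop rectangle and alpha supplied as material parameters that the operator UI adjusts.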

Hologress' AR Exercise Trainer

I architected and developed the core systems for Hologress, an Augmented Reality fitness application that lets users drop 3D trainers into their physical space and learn how to perform exercises correctly. Using mobile AR, the app places virtual characters at real world scale, then steps users through proper form, timing, and sequencing so each exercise feels like having a coach in the room instead of watching a flat video.

To keep the product scalable, I designed a fully data driven exercise framework built around ScriptableObjects in Unity. Every exercise is defined as data only, including labels, instructions, character animation prefabs, audio, categories, and progression settings. A designer can duplicate an existing exercise asset, fill in the new fields, and assign it to a category without touching code. The responsive UI system discovers these data assets at runtime, automatically generates the correct menus and buttons, and wires them into the flow so new content is instantly available. In practice, this meant the team could keep expanding the exercise library indefinitely while my code remained stable, which is exactly the outcome I was aiming for when I set out to “build myself out of the contract.”

Scope of Work:

- Architected the core AR exercise experience in Unity, with virtual trainers teaching movements in the user’s real space.

- Designed a ScriptableObject based content pipeline so new exercises can be added entirely through data.

- Implemented a responsive UI system that auto detects new exercise assets and generates the correct navigation elements at runtime.

- Built reusable interaction and animation controllers shared across all exercises to keep behavior consistent and maintainable.

- Optimized the architecture so designers and non programmers could keep expanding the exercise library without requiring engineering changes.
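
The runtime discovery step described above can be pictured as a pure function from a set of exercise assets to a menu structure. The following is a minimal Python sketch of that idea, not the Unity implementation; the `Exercise` fields shown are a simplified, hypothetical subset, and the sample exercises are invented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Exercise:
    """Data-only description of one exercise (simplified, hypothetical)."""
    label: str
    category: str
    instructions: str


def build_menu(exercises):
    """Group exercise assets by category, sorted for a stable display order."""
    grouped = defaultdict(list)
    for exercise in exercises:
        grouped[exercise.category].append(exercise.label)
    return {category: sorted(labels) for category, labels in sorted(grouped.items())}


library = [
    Exercise("Squat", "Legs", "Keep your chest up and sit back."),
    Exercise("Push-up", "Chest", "Keep a straight line from head to heels."),
    Exercise("Lunge", "Legs", "Step forward and lower the back knee."),
]
menu = build_menu(library)

# Adding content is a data change only: the menu code above is untouched.
library.append(Exercise("Plank", "Core", "Hold a rigid, level position."))
menu_after = build_menu(library)
```

The point of the design is visible in the last two lines: a new exercise shows up in the generated menu without any change to the code that builds it.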

Dormakaba Interactive Exhibit

I designed and developed the Dormakaba Interactive Exhibit, an augmented reality experience that let customers explore Dormakaba’s access solutions in a hands on, immersive way. Instead of relying on static brochures or hardware bolted to a wall, visitors could use a tablet to bring 3D product models to life, view them in context, and step through guided demos that explained how each solution worked in real environments like offices, hotels, and secure facilities.

Under the hood, the exhibit was built in Unity with a modular, data driven content pipeline so new products, scenes, and languages could be added without rewriting code. Product teams could update visuals and messaging while the core AR interaction, animation, and UI systems stayed the same. The result was a portable, reusable exhibit that Dormakaba could take to events, showrooms, and client demos, giving sales and marketing teams a consistent, memorable way to showcase complex hardware and systems.

Scope of Work:

- Architected and implemented a Unity based AR exhibit for Dormakaba product demonstrations.

- Built interactive 3D product visualizations with hotspots, animations, and step by step narratives.

- Designed a data driven content system so new products and configurations could be added without code changes.

- Created an intuitive UX for both guided sales presentations and self directed exploration.

- Optimized performance for mobile devices handling detailed 3D models in event environments.

- Collaborated with Dormakaba stakeholders to translate product features into clear, interactive stories suitable for trade shows and customer demos.

disruptED

I developed disruptED as a cross platform educational experience that blends Augmented Reality and Virtual Reality to help Pre K through 3rd grade students learn in a more engaging, joyful way. Built in Unity at a time when AR and VR support was still maturing, the app lets kids move seamlessly between reading physical books enhanced by AR and stepping into fully immersive VR scenes that bring characters, environments, and concepts to life.

From a technical standpoint, I designed the application as a single codebase that could target both mobile AR and mobile driven VR, with responsive UI flows, shared interaction patterns, and reusable content pipelines. This included implementing character animations, interactive hotspots, and kid friendly controls that worked across modes while keeping performance solid on commodity devices. The result gave educators and parents a tool that transforms traditional reading time into interactive learning, which contributed to disruptED being recognized as both a winner and a finalist in the EdTech Cool Tool Awards for Best VR and AR Solution.

Scope of Work:

- Developed a Unity based AR and VR learning app focused on early elementary students.

- Implemented cross platform UX and UI systems that support both handheld AR and mobile powered VR from a single codebase.

- Created animations, interactions, and mini experiences that align with curriculum goals while staying intuitive for young children.

- Optimized content and rendering so the experience runs smoothly on typical smartphones and low cost VR viewers.

- Collaborated with the product team to translate educational objectives into playful, immersive scenes that keep kids engaged and support better retention.

Rescan 360

I implemented the AR and VR viewing modes for Rescan 360’s commercial real estate platform, giving clients a radically new way to explore large properties digitally. Using Unity, I built an experience where users could step into fully reconstructed buildings, either on a mobile device in AR or in an immersive VR session, and move through hallways, rooms, and key areas as if they were physically on site. Instead of static photos or traditional 360 tours, they could navigate rich 3D spaces that came directly from Rescan 360’s head-worn scanning system, which captured the geometry of each building as technicians walked through it.

My work focused on the client side of that pipeline. I integrated the scanned environment data into a flexible Unity scene loader that respected user permissions, so different roles only saw the spaces and tools they were authorized for. On top of that, I designed and implemented a mobile responsive UI that worked cleanly on both Android and iOS, with controls tuned for handheld AR walkthroughs as well as VR-style navigation and inspection. The result was a unified viewing application that showcased the full power of Rescan 360’s hardware and data pipeline and contributed to the hardware-and-software solution receiving a Red Dot design award in the “Best of the Best” category in 2021.

Scope of Work:

- Implemented AR and VR viewing options in Unity so users could explore commercial real estate scans on mobile and in immersive modes.

- Built a mobile responsive UI system for Android and iOS, including navigation, inspection, and mode switching.

- Integrated head-mounted scanner output into the client app, loading building geometry based on user permissions and role.

- Created flexible scene loading and viewing flows so new scans and sites could be added without major code changes.

- Collaborated with the engineering team to optimize performance and UX across devices and refine the experience into a polished client-ready tool.
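
One simple way to think about permission-aware loading is as a filter applied before anything is loaded. The Python sketch below illustrates that idea only; the `ScanArea` type, the role names, and the sample building are all hypothetical and do not reflect Rescan 360's actual data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanArea:
    """A loadable part of a scanned building plus the roles allowed to see it."""
    name: str
    allowed_roles: frozenset


def areas_to_load(areas, role):
    """Return the names of areas this role may load, in their original order."""
    return [area.name for area in areas if role in area.allowed_roles]


# Invented sample data for illustration.
building = [
    ScanArea("Lobby", frozenset({"owner", "broker", "tenant"})),
    ScanArea("Office floor 2", frozenset({"owner", "broker"})),
    ScanArea("Server room", frozenset({"owner"})),
]

broker_view = areas_to_load(building, "broker")
tenant_view = areas_to_load(building, "tenant")
```

Filtering before loading means unauthorized geometry is never requested in the first place, rather than being loaded and then hidden.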

Games

VR Disasterville:

Army National Guard

VR Disasterville runs on a Meta Quest 3 colocation system that lets a team of players share the same physical space and see the same virtual world perfectly aligned around them. The experience was built as a mixed reality activation for the Army National Guard, transforming ordinary high school gyms into walkable disaster zones where squads of students fight floods, wildfires, and earthquake aftermath together. Using inside-out tracking, hand tracking, and physical props mapped one to one into the virtual scene, players can grab oars, hoses, and rescue tools with their real hands and feel fully grounded in the mission.

On the technical side, I designed the colocation and networking architecture so that up to five Quest 3 headsets share a single coordinate system, even on an offline local network. Each deployment starts with a fast calibration pass that locks the virtual environment to the real gym layout, then distributes that reference space to every headset so avatars, props, and obstacles line up within a tight error margin. I used Unity, Photon Fusion, and the Meta SDK to synchronize player positions, interactions, and mission state, while a separate operator interface handles session control, safety boundaries, and quick resets between groups.

Scope of Work:

- Architected the Quest 3 colocation pipeline that aligns multiple headsets to a shared physical space.

- Implemented offline, LAN based multiplayer using Photon Fusion with deterministic session control.

- Integrated Meta hand tracking and physical props so real world tools match their virtual counterparts.

- Built calibration tools to bind virtual disaster environments to arbitrary gym layouts in minutes.
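
To make the shared coordinate system idea concrete, here is one common way such an alignment can be computed: each headset observes two known floor markers in its own tracking frame, and from those two points it solves for the yaw and translation that map its local floor coordinates into the venue frame. This is a generic Python sketch under that two-marker assumption, not a description of the calibration method actually used in the project; the function names are hypothetical.

```python
import math


def solve_alignment(local_a, local_b, shared_a, shared_b):
    """Return (yaw, tx, tz) mapping local floor coordinates into the shared frame.

    Each point is an (x, z) pair on the floor plane. Markers A and B are seen
    at local_a/local_b by the headset and sit at shared_a/shared_b in the venue.
    """
    local_heading = math.atan2(local_b[1] - local_a[1], local_b[0] - local_a[0])
    shared_heading = math.atan2(shared_b[1] - shared_a[1], shared_b[0] - shared_a[0])
    yaw = shared_heading - local_heading
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    # Translation is chosen so that marker A lands exactly on its shared position.
    tx = shared_a[0] - (cos_y * local_a[0] - sin_y * local_a[1])
    tz = shared_a[1] - (sin_y * local_a[0] + cos_y * local_a[1])
    return yaw, tx, tz


def to_shared(point, alignment):
    """Apply a solved alignment to a local (x, z) point."""
    yaw, tx, tz = alignment
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    x, z = point
    return (cos_y * x - sin_y * z + tx, sin_y * x + cos_y * z + tz)


# A headset whose local frame is rotated and offset relative to the gym.
alignment = solve_alignment(
    local_a=(2.0, 3.0), local_b=(2.0, 13.0),
    shared_a=(0.0, 0.0), shared_b=(10.0, 0.0),
)
marker_b_in_gym = to_shared((2.0, 13.0), alignment)
```

Once every headset has its own alignment, positions can be exchanged in venue coordinates and converted at the edges, which is what lets avatars and props line up for all players.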

Skewerz:

An Annoying Orange Game

I designed and developed Skewerz, a free to play mobile game for the creator of Annoying Orange, built specifically to fit his brand’s fast, chaotic humor. After prototyping several gameplay concepts, I worked with him to select a Snake inspired design that felt both instantly familiar and on brand. The final game puts players in control of a growing food skewer, dodging hazards, collecting items, and triggering over the top reactions from the characters, all wrapped in the Annoying Orange visual and audio style for iOS and Android.

On top of the core design and implementation, I collaborated with one junior developer to plan and ship the full monetization strategy, including in app purchases and ad driven engagement loops. We intentionally tuned the game to be more challenging than a typical casual title to match the expectations of his audience, knowing that this would trade off some retention and revenue for a difficulty curve the creator was proud of. From a production standpoint, I handled everything from gameplay systems and level progression to UI, feel, and performance, delivering a branded, polished experience that aligned with the creator’s goals.

Scope of Work:

- Prototyped multiple concepts for the Annoying Orange brand and led the selection of a Snake inspired core gameplay loop.

- Designed and implemented the full game in Unity for both Android and iOS, including controls, scoring, and progression.

- Translated the Annoying Orange humor and style into in game visuals, animations, and audio reactions.

- Designed and implemented the monetization model, including in app purchases and ad integration.

- Tuned difficulty and game balance to create a deliberately challenging experience for dedicated fans.

- Delivered a complete, branded mobile title from concept through launch as an external contractor.

Gameshow

At Misfits Gaming Group I led a UGC focused Roblox development team that helped create Gameshow, a competitive party game built under the Pixel Playground studio with Karl Jacobs and KreekCraft. Gameshow drops players into a chaotic TV show where teams dodge lasers, dynamite, and traps to earn points, unlock cosmetics from a rotating shop, and climb a global superstar ranking across 16 player lobbies. Shortly after launch, the game passed 3 million visits, with peak concurrent players in the tens of thousands, and became the flagship Roblox experience tied to the Pixel Playground brand and live tour.

On the game side, I focused on architecting a modular, live service friendly framework so we could ship new maps, obstacles, and modes quickly without breaking existing content. Core systems like round flow, team scoring, difficulty scaling, and hazard behavior were all driven by configuration data, which let designers and scripters build and iterate on minigames without touching low level infrastructure. We layered this with progression systems such as a free battle pass, session based rewards, and event badges so that live operations and marketing had clear levers to pull for new seasons and collaborations.

Scope of Work:

- Led a UGC Roblox development team at Misfits Gaming Group on Pixel Playground’s flagship game, Gameshow.

- Designed the core game loop for team based, competitive obstacle course style rounds with scalable difficulty.

- Architected modular systems for rounds, scoring, hazards, and maps so new minigames could be added through configuration rather than bespoke code.

- Implemented progression features including a free battle pass, daily shop integrations, and event driven rewards.

- Integrated analytics and tuning hooks to support live events, marketing beats, and future content updates.

- Ran Agile processes for the dev team, including sprint planning, backlog management, and code reviews, ensuring stable releases in support of tours, streams, and creator content.

disruptED

I developed disruptED as a cross platform educational experience that blends Augmented Reality and Virtual Reality to help Pre K through 3rd grade students learn in a more engaging, joyful way. Built in Unity at a time when AR and VR support was still maturing, the app lets kids move seamlessly between reading physical books enhanced by AR and stepping into fully immersive VR scenes that bring characters, environments, and concepts to life.

From a technical standpoint, I designed the application as a single codebase that could target both mobile AR and mobile driven VR, with responsive UI flows, shared interaction patterns, and reusable content pipelines. This included implementing character animations, interactive hotspots, and kid friendly controls that worked across modes while keeping performance solid on commodity devices. The result gave educators and parents a tool that transforms traditional reading time into interactive learning, which contributed to disruptED being recognized as both a winner and a finalist in the EdTech Cool Tool Awards for Best VR and AR Solution.

Scope of Work:

- Developed a Unity based AR and VR learning app focused on early elementary students.

- Implemented cross platform UX and UI systems that support both handheld AR and mobile powered VR from a single codebase.

- Created animations, interactions, and mini experiences that align with curriculum goals while staying intuitive for young children.

- Optimized content and rendering so the experience runs smoothly on typical smartphones and low cost VR viewers.

- Collaborated with the product team to translate educational objectives into playful, immersive scenes that keep kids engaged and support better retention.

Token Toss

I solo-built Token Toss end-to-end: a UGC mobile game on Solana powered by the OGAL Protocol (custom Solana program) and the Solana Toolbelt for Unity (built on top of the Solana Unity SDK). The game introduces a new primitive for games (a global, open UGC asset layer), where creators verifiably own their assets (by wallet) and can update them, while anyone can freely read and use those assets across experiences.

In Token Toss, players design levels in-game and publish them on-chain via OGAL; the creator pays only at publish time. Any player can then load that level by its mint address with zero additional blockchain transactions. This removes platform lock-in, preserves asset utility even if a single title sunsets, and lets creators keep more of what they earn versus 30%–75% platform cuts.

Scope of Work:

- Product, client, and on-chain architecture from concept to alpha.

- Unity client with in-game level editor and mint flow.

- OGAL Solana program for ownership, update authority, and open reads.

- Solana Toolbelt for Unity to make wallet, mint, and read operations seamless.

Review the git repo at https://github.com/Ghiblify-Games/OGAL-And-Token-Toss

Download the free Android game at https://www.ghiblifygames.com
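
The ownership model described above (owner-only updates, open reads by mint address) can be sketched with a plain in-memory registry. The Python below is only a conceptual stand-in: it does not use the Solana SDK or the OGAL program's real interface, and `AssetLayer`, its methods, and the sample addresses are hypothetical names chosen for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    owner: str       # wallet that holds update authority
    payload: bytes   # serialized level data


class AssetLayer:
    """In-memory stand-in for an on-chain asset registry (illustrative only)."""

    def __init__(self):
        self._assets = {}
        self.transactions = 0   # counts writes; reads never add to it

    def publish(self, mint, owner, payload):
        """Create a new asset under a mint address; the publisher becomes owner."""
        if mint in self._assets:
            raise ValueError("mint address already in use")
        self._assets[mint] = Asset(owner, payload)
        self.transactions += 1

    def update(self, mint, signer, payload):
        """Replace the payload; assumed here to be a write, like publish."""
        asset = self._assets[mint]
        if signer != asset.owner:
            raise PermissionError("only the owning wallet may update")
        asset.payload = payload
        self.transactions += 1

    def read(self, mint):
        """Anyone may load an asset by mint address without a transaction."""
        return self._assets[mint].payload


layer = AssetLayer()
layer.publish("MintA", "wallet_creator", b"level-v1")
level_for_player_1 = layer.read("MintA")
level_for_player_2 = layer.read("MintA")
```

In this model, any number of players can load the level after the single publish, and only the creator's wallet can change it.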

Let's Go Dig!

I designed and developed Let’s Go Dig!, an innovative cross platform mobile game that mixed casual digging gameplay with social and real world rewards. It was my first full stack project, and I used Parse to build a backend that handled user accounts, persistent progress, and a set of social systems that made the world feel shared and playful. Players could create an account, log in from different devices, and find treasure chests that contained actual redeemable prizes. Those prizes could be claimed or reburied into a friend’s game, which turned simple loot into a social gesture between players instead of a purely single player reward loop.

On top of the treasure system, I implemented a message in a bottle mechanic where players could bury notes for their friends to discover while digging. Finding and reading those messages added a personal, almost pen pal layer to the experience. Another standout feature allowed players to swap the destructible ground texture with a photo from their camera or gallery, so they could literally watch their character dig through an image that mattered to them. Working with a single artist and a limited budget, I focused on making each interaction visually satisfying and cohesive, using smart reuse of assets and effects so the game still felt polished and expressive.

Scope of Work:

- Designed and implemented core digging gameplay and progression for a cross platform mobile title.

- Built a Parse based backend for account creation, login, and persistent game data.

- Created a treasure chest system with real prize rewards that could be claimed or reburied into friends’ games.

- Implemented a message in a bottle feature so players could bury and discover personal messages in each other’s worlds.

- Added a custom ground system that used photos from the device camera or gallery as the destructible terrain.

- Collaborated with an artist to deliver engaging visuals and UI while working within tight budget constraints.

- Handled full stack responsibilities, from backend data models and APIs to client side gameplay, UI, and polish.

Mobile Action RPG:

Web3 Economy

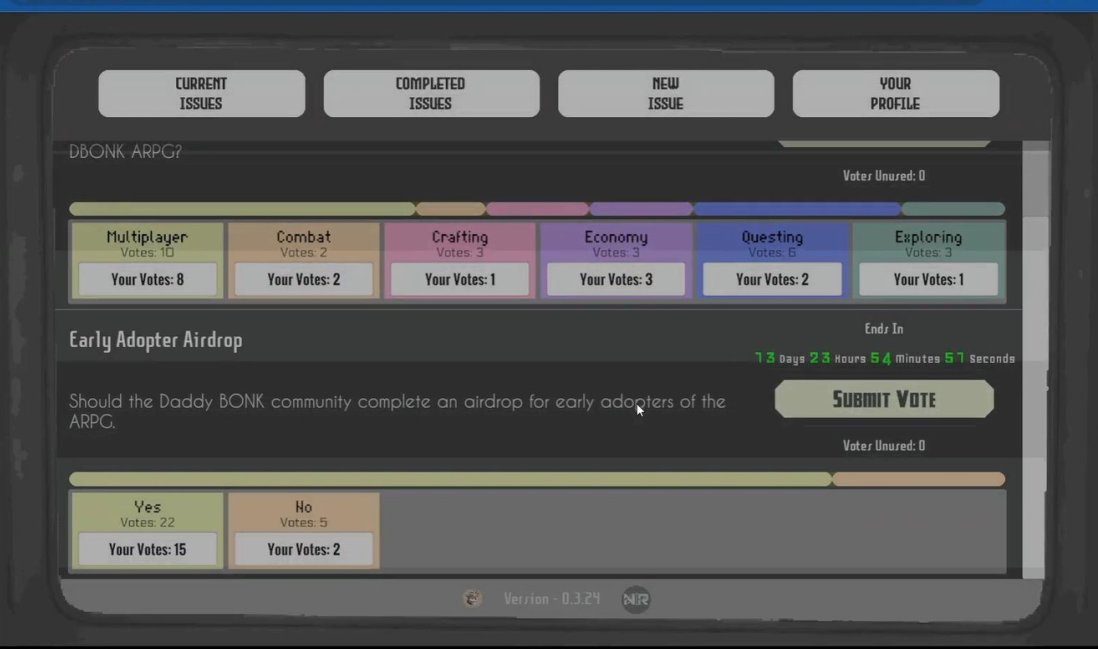

I designed and developed a mobile friendly 3D action RPG prototype for NanoRes Studios that gives Solana based Daddy BONK digital collectibles real in game utility. The prototype serves as the foundation for a larger ARPG experience, where holders can bring their on chain assets into a persistent world, explore, fight, trade, and progress through story content that reflects the Daddy BONK community and lore. My focus was to prove out the core gameplay loop, integrate all the essential RPG systems, and architect the world in a way that would scale to a full production title targeting mobile hardware.

Under the hood, I implemented a cell based world architecture that uses spatial partitioning and distant cell rendering so large environments can stream efficiently on phones and tablets. On top of that, I built out combat systems for melee and ranged attacks, a branching dialogue system, quest and faction systems, a trading and looting pipeline (containers, corpses, and world pickups), dynamic weather, and a full day and night cycle that influences ambience and gameplay. The prototype also includes character creation with a custom face builder, consumables with configurable effects, NPC AI driven by behavior trees, and unified UI flows that tie all of these systems together. Taken as a whole, it is a vertical slice of the ARPG framework that NanoRes can now evolve into a full Solana powered game.

Scope of Work:

- Designed and implemented a mobile friendly 3D ARPG prototype that integrates Solana based Daddy BONK digital assets.

- Built a cell based world system with spatial partitioning and distant cell rendering for scalable open environments.

- Implemented core combat systems for melee and ranged attacks, including hit detection, damage, and feedback.

- Created a branching dialogue system, quest system, and faction system to support narrative and reputation driven gameplay.

- Developed trading and looting systems for containers, corpses, and world items, with inventory and item data structures.

- Added dynamic weather and day and night cycles that drive lighting, ambience, and potential gameplay hooks.

- Implemented character creation with a custom face builder and configurable player stats.

- Built NPC AI using behavior trees for patrol, combat, and interaction logic.

- Implemented consumables and item effects, crafting systems, and UI for managing all game systems in a cohesive way.
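
As an illustration of the cell based world idea, the sketch below partitions the world into a uniform grid and, for a given player position, decides which cells should be loaded in full detail and which should only be shown as distant, simplified versions. It is a generic Python sketch of grid partitioning, not the prototype's actual code; the function names, the cell size, and the two radii are assumptions picked for the example.

```python
import math


def cell_of(x, z, cell_size):
    """Return the integer grid cell containing world position (x, z)."""
    return (math.floor(x / cell_size), math.floor(z / cell_size))


def classify_cells(player_pos, cell_size, near_radius, far_radius):
    """Split the cells around the player into full-detail and distant sets.

    Radii are measured in whole cells using Chebyshev distance, so each set
    forms a square ring around the player's cell.
    """
    cx, cz = cell_of(player_pos[0], player_pos[1], cell_size)
    near, far = set(), set()
    for dx in range(-far_radius, far_radius + 1):
        for dz in range(-far_radius, far_radius + 1):
            ring = max(abs(dx), abs(dz))
            cell = (cx + dx, cz + dz)
            if ring <= near_radius:
                near.add(cell)
            else:
                far.add(cell)
    return near, far


player_cell = cell_of(130.0, -10.0, 64.0)
near_cells, far_cells = classify_cells((130.0, -10.0), 64.0, near_radius=1, far_radius=2)
```

As the player crosses a cell boundary, comparing the new sets with the previous ones gives the cells to stream in, downgrade to distant rendering, or unload.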

AirTime HD

At Radd3 I worked on a suite of HTC Vive training products that gave professional athletes a way to take high quality mental reps in Virtual Reality without adding physical wear and tear. The flagship experience was an NFL training tool where coaches could import offensive and defensive plays, assign matchups, and drop players into fully immersive game situations with crowd noise, pressure, and distractions. Players ran through their reads and responsibilities from a first person view, while coaches collected detailed session data that would be impossible to capture in a standard practice.

I contributed heavily to both the core VR experience and the Coaches Companion application that ran on Windows Surface tablets. On the VR side I implemented the UI systems that players used to navigate sessions and drills, built the audio system that handled crowd, coaching, and on field soundscapes, and created the wide receiver animation blending so catches could happen at variable positions around the player instead of at fixed, keyframed locations. For the Coaches Companion, I implemented a responsive UI that made it easy to configure plays, schedule sessions, and review metrics from the sideline or meeting room, and I wired the client into the backend services using UnityWebRequest for authentication and data exchange.

During my time at Radd3 I also solo developed Matt Williams’ VR Batting Practice, another HTC Vive experience that placed players at home plate in a major league stadium. The simulator supported multiple pitch types like sliders, curveballs, sinkers, knuckleballs, change ups, and fastballs, and at the end of each session it presented a clear, visual breakdown of contact quality and outcomes to help players refine their swing. Both products combined realism, feedback, and repetition to give pros meaningful practice time in VR.

Scope of Work:

- Developed an HTC Vive NFL training platform that let players take immersive mental reps on imported offensive and defensive plays.

- Built VR UI systems for session flow, play selection, and in experience interaction.

- Implemented the audio system for crowd, on field, and coaching feedback to simulate game day pressure.

- Created wide receiver animation blending so catch animations could adjust to different ball locations around the player.

- Developed the Coaches Companion application targeting Windows Surface tablets with a responsive, touch friendly UI.

- Integrated client to backend communication using UnityWebRequest for authentication, session data, and analytics.

After completing this project for Radd3, I went on to design and develop Matt Williams’ VR Batting Practice for HTC Vive, including pitch logic, hitting feedback, and post session performance UI.

Mobile Products

Rescan 360

I implemented the AR and VR viewing modes for Rescan 360’s commercial real estate platform, giving clients a radically new way to explore large properties digitally. Using Unity, I built an experience where users could step into fully reconstructed buildings, either on a mobile device in AR or in an immersive VR session, and move through hallways, rooms, and key areas as if they were physically on site. Instead of static photos or traditional 360 tours, they could navigate rich 3D spaces that came directly from Rescan 360’s head-worn scanning system, which captured the geometry of each building as technicians walked through it.

My work focused on the client side of that pipeline. I integrated the scanned environment data into a flexible Unity scene loader that respected user permissions, so different roles only saw the spaces and tools they were authorized for. On top of that, I designed and implemented a mobile responsive UI that worked cleanly on both Android and iOS, with controls tuned for handheld AR walkthroughs as well as VR-style navigation and inspection. The result was a unified viewing application that showcased the full power of Rescan 360’s hardware and data pipeline and contributed to the hardware-and-software solution receiving a Red Dot design award in the “Best of the Best” category in 2021.

Scope of Work:

- Implemented AR and VR viewing options in Unity so users could explore commercial real estate scans on mobile and in immersive modes.

- Built a mobile responsive UI system for Android and iOS, including navigation, inspection, and mode switching.

- Integrated head-mounted scanner output into the client app, loading building geometry based on user permissions and role.

- Created flexible scene loading and viewing flows so new scans and sites could be added without major code changes.

- Collaborated with the engineering team to optimize performance and UX across devices and refine the experience into a polished client-ready tool.

DraftCard

I led Unity development for DraftCard, a mobile app that helps student athletes build real time profiles of their athletic careers and get discovered by college scouts. Players can upload highlights, stats, and photos into a living profile that showcases their growth over time, making it much easier for coaches to evaluate talent beyond a single game or tournament. As someone who played multiple sports growing up, this project was especially meaningful for me, since the product directly supports the kind of opportunities I would have wanted as a high school athlete.

When I joined, my first responsibility was to stabilize the existing codebase. I worked with a small Unity team to pay down the technical debt left by a previous vendor, refactor core systems, and establish cleaner patterns for navigation, UI, and data flow. Once the foundation was solid, we expanded the app with a more responsive, mobile first UI, cross platform media capture and upload (camera and gallery), and push notification support so athletes and coaches could stay engaged. I also designed and implemented a client side framework for securely calling the backend, handling authentication, permissions, and data synchronization, coordinating closely with a backend team in another time zone to make sure the end to end experience was reliable and secure.

Scope of Work:

- Led a small Unity development team on DraftCard, a mobile app for student athletes to showcase their talent to college scouts.

- Refactored and stabilized a legacy codebase, resolving technical debt and improving maintainability and performance.

- Designed and implemented a more responsive, mobile focused UI and navigation flow.

- Built cross platform photo capture and upload features for iOS and Android, integrated with athlete profiles.

- Added push notification support to keep athletes and coaches informed about activity and opportunities.

- Designed a secure client side framework for backend API calls, including authentication, permissions, and data syncing.

- Collaborated with a remote backend team to integrate the app with existing services and ensure end to end reliability.

Skewerz:

An Annoying Orange Game

I designed and developed Skewerz, a free to play mobile game for the creator of Annoying Orange, built specifically to fit his brand’s fast, chaotic humor. After prototyping several gameplay concepts, I worked with him to select a Snake inspired design that felt both instantly familiar and on brand. The final game puts players in control of a growing food skewer, dodging hazards, collecting items, and triggering over the top reactions from the characters, all wrapped in the Annoying Orange visual and audio style for iOS and Android.

On top of the core design and implementation, I collaborated with one junior developer to plan and ship the full monetization strategy, including in app purchases and ad driven engagement loops. We intentionally tuned the game to be more challenging than a typical casual title to match the expectations of his audience, knowing that this would trade off some retention and revenue for a difficulty curve the creator was proud of. From a production standpoint, I handled everything from gameplay systems and level progression to UI, feel, and performance, delivering a branded, polished experience that aligned with the creator’s goals.

Scope of Work:

- Prototyped multiple concepts for the Annoying Orange brand and led the selection of a Snake inspired core gameplay loop.

- Designed and implemented the full game in Unity for both Android and iOS, including controls, scoring, and progression.

- Translated the Annoying Orange humor and style into in game visuals, animations, and audio reactions.

- Designed and implemented the monetization model, including in app purchases and ad integration.

- Tuned difficulty and game balance to create a deliberately challenging experience for dedicated fans.

- Delivered a complete, branded mobile title from concept through launch as an external contractor.

Token Toss

I solo-built Token Toss end-to-end: a UGC mobile game on Solana powered by the OGAL Protocol (custom Solana program) and the Solana Toolbelt for Unity (built on top of the Solana Unity SDK). The game introduces a new primitive for games (a global, open UGC asset layer), where creators verifiably own their assets (by wallet) and can update them, while anyone can freely read and use those assets across experiences.

In Token Toss, players design levels in-game and publish them on-chain via OGAL; the creator pays only at publish time. Any player can then load that level by its mint address with zero additional blockchain transactions. This removes platform lock-in, preserves asset utility even if a single title sunsets, and lets creators keep more of what they earn versus 30%–75% platform cuts.
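The publish-once, read-freely flow described above can be sketched as a small in-memory model. This is not the OGAL program or the Solana Unity SDK API: the names `AssetRegistry`, `publish`, `update`, and `read` are hypothetical, and the sketch assumes that every write (publish or update) is a paid transaction while reads are free.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    owner_wallet: str  # wallet that published the asset and may update it
    data: bytes        # serialized level data


@dataclass
class AssetRegistry:
    """Hypothetical stand-in for an open on-chain asset layer (not the OGAL API)."""

    assets: dict = field(default_factory=dict)
    paid_transactions: int = 0  # assumption: only writes cost a transaction

    def publish(self, mint_address: str, wallet: str, data: bytes) -> None:
        if mint_address in self.assets:
            raise ValueError("mint address already in use")
        self.assets[mint_address] = Asset(wallet, data)
        self.paid_transactions += 1

    def update(self, mint_address: str, wallet: str, data: bytes) -> None:
        asset = self.assets[mint_address]
        if asset.owner_wallet != wallet:
            raise PermissionError("only the owning wallet can update this asset")
        asset.data = data
        self.paid_transactions += 1

    def read(self, mint_address: str) -> bytes:
        # Reads are open to anyone and never count as a transaction.
        return self.assets[mint_address].data
```

In this model any client that knows a mint address can call `read` as often as it likes, while `update` is rejected for every wallet except the one that published the asset.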

Scope of Work:

- Product, client, and on-chain architecture from concept to alpha.

- Unity client with in-game level editor and mint flow.

- OGAL Solana program for ownership, update authority, and open reads.

- Solana Toolbelt for Unity to make wallet, mint, and read operations seamless.

Review the git repo at https://github.com/Ghiblify-Games/OGAL-And-Token-Toss

Download the free Android game at https://www.ghiblifygames.com

Gameshow

At Misfits Gaming Group I led a UGC-focused Roblox development team that helped create Gameshow, a competitive party game built under the Pixel Playground studio with Karl Jacobs and KreekCraft. Gameshow drops players into a chaotic TV show where teams dodge lasers, dynamite, and traps to earn points, unlock cosmetics from a rotating shop, and climb a global superstar ranking across 16-player lobbies. Shortly after launch, the game passed 3 million visits, with peak concurrent players in the tens of thousands, and became the flagship Roblox experience tied to the Pixel Playground brand and live tour.

On the game side, I focused on architecting a modular, live-service-friendly framework so we could ship new maps, obstacles, and modes quickly without breaking existing content. Core systems like round flow, team scoring, difficulty scaling, and hazard behavior were all driven by configuration data, which let designers and scripters build and iterate on minigames without touching low-level infrastructure. We layered this with progression systems such as a free battle pass, session-based rewards, and event badges so that live operations and marketing had clear levers to pull for new seasons and collaborations.
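As a rough illustration of configuration-driven rounds, the sketch below keeps every tunable in a data record and lets generic functions read it. The production game runs on Roblox, so this Python version is illustrative only; the field names, the linear difficulty rule, and the example rounds are all assumptions rather than the shipped design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoundConfig:
    name: str
    duration_seconds: int
    hazards: tuple           # hazard identifiers enabled for this round
    base_hazard_rate: float  # hazards spawned per second at difficulty 1
    points_per_survivor: int


def hazard_rate(config: RoundConfig, difficulty: int) -> float:
    """Scale the spawn rate linearly with difficulty (an assumed rule)."""
    return config.base_hazard_rate * difficulty


def score_round(config: RoundConfig, survivors_by_team: dict) -> dict:
    """Award each team points for every member still standing."""
    return {
        team: count * config.points_per_survivor
        for team, count in survivors_by_team.items()
    }


# Adding a minigame means adding a record here, not writing new round logic.
ROUNDS = {
    "laser_hall": RoundConfig("Laser Hall", 60, ("laser",), 0.5, 10),
    "dynamite_dash": RoundConfig("Dynamite Dash", 45, ("dynamite", "trap"), 0.8, 15),
}
```

The design point is that `hazard_rate` and `score_round` never mention a specific minigame, so a new entry in `ROUNDS` is enough to change what a round looks like.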

Scope of Work:

- Led a UGC Roblox development team at Misfits Gaming Group on Pixel Playground’s flagship game, Gameshow.

- Designed the core game loop for team based, competitive obstacle course style rounds with scalable difficulty.

- Architected modular systems for rounds, scoring, hazards, and maps so new minigames could be added through configuration rather than bespoke code.

- Implemented progression features including a free battle pass, daily shop integrations, and event driven rewards.

- Integrated analytics and tuning hooks to support live events, marketing beats, and future content updates.

- Ran Agile processes for the dev team, including sprint planning, backlog management, and code reviews, ensuring stable releases in support of tours, streams, and creator content.

Luminant Music

At Cybernetic Entertainment I served as Lead Unity Developer on a suite of projects that ranged from virtual robots that could play music, draw art, and play board games, to Luminant Music, a cross platform music visualization app packed with creative features. Luminant Music shipped as an immersive visualizer that reacts in real time to a listener’s audio, turning living rooms and displays into dynamic music experiences. In parallel, we pushed forward on experimental virtual robot software that blended character animation, AI driven behaviors, and interactive entertainment, even though funding constraints ultimately prevented that work from going to market.

This was one of the largest engineering teams I have led, with close to thirty contributors spread across four active Unity projects. My job was to create shared architectural systems that could be reused across titles and to implement cost effective workflows that made multi project development sustainable. I defined the core project structure, modularized subsystems for input, UI, effects, and content management, and set up pipelines so new features could be developed once and integrated into multiple products with minimal overhead. Alongside our QA Lead, I implemented a Jenkins based continuous integration and delivery setup wired into our Git and GitKraken workflow, with Slack notifications for build status, which kept quality high and feedback loops tight even as the team grew. Mentoring that many engineers, from juniors to seasoned contributors, was a highlight of my time there and I came away with as much learning as I shared.

Scope of Work:

- Led Unity development across four concurrent projects, including the cross platform Luminant Music visualizer and multiple virtual robot experiences.

- Designed shared architectural systems and frameworks that could be reused across projects to reduce duplication and improve maintainability.

- Established multi project development workflows that supported rapid iteration while keeping repositories, branches, and releases organized.

- Implemented continuous integration and delivery using Jenkins integrated with Git and GitKraken, including automated build pipelines and Slack based notifications.

- Collaborated closely with QA leadership to formalize testing practices and ensure stable releases across platforms.

- Mentored and supported a team of nearly thirty contributors, helping engineers grow while aligning their work with studio level technical standards and goals.

Mobile Action RPG: Web3 Economy

I designed and developed a mobile-friendly 3D action RPG prototype for NanoRes Studios that gives Solana-based Daddy BONK digital collectibles real in-game utility. The prototype serves as the foundation for a larger ARPG experience, where holders can bring their on-chain assets into a persistent world, explore, fight, trade, and progress through story content that reflects the Daddy BONK community and lore. My focus was to prove out the core gameplay loop, integrate all the essential RPG systems, and architect the world in a way that would scale to a full production title targeting mobile hardware.

Under the hood, I implemented a cell based world architecture that uses spatial partitioning and distant cell rendering so large environments can stream efficiently on phones and tablets. On top of that, I built out combat systems for melee and ranged attacks, a branching dialogue system, quest and faction systems, a trading and looting pipeline (containers, corpses, and world pickups), dynamic weather, and a full day and night cycle that influences ambience and gameplay. The prototype also includes character creation with a custom face builder, consumables with configurable effects, NPC AI driven by behavior trees, and unified UI flows that tie all of these systems together. Taken as a whole, it is a vertical slice of the ARPG framework that NanoRes can now evolve into a full Solana powered game.
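The cell-based streaming idea can be sketched as follows: map the player's position to a grid cell, load the cells in a small ring around it at full detail, and treat a wider ring as distant cells drawn with cheaper proxies. The function names, the square (Chebyshev) rings, and the radii are assumptions for illustration, not the prototype's actual code.

```python
import math


def cell_of(x: float, z: float, cell_size: float) -> tuple:
    """Map a world position to integer cell coordinates."""
    return (math.floor(x / cell_size), math.floor(z / cell_size))


def classify_cells(player_cell: tuple, near_radius: int, far_radius: int) -> dict:
    """Split the cells around the player into full-detail and distant sets.

    Rings are measured with Chebyshev distance, so each ring is a square.
    """
    px, pz = player_cell
    near, far = set(), set()
    for dx in range(-far_radius, far_radius + 1):
        for dz in range(-far_radius, far_radius + 1):
            ring = max(abs(dx), abs(dz))
            target = near if ring <= near_radius else far
            target.add((px + dx, pz + dz))
    return {"near": near, "far": far}
```

Re-running `classify_cells` whenever the player crosses a cell boundary and diffing the result against the previous sets gives the list of cells to load, demote to a distant proxy, or unload.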

Scope of Work:

- Designed and implemented a mobile friendly 3D ARPG prototype that integrates Solana based Daddy BONK digital assets.

- Built a cell based world system with spatial partitioning and distant cell rendering for scalable open environments.

- Implemented core combat systems for melee and ranged attacks, including hit detection, damage, and feedback.

- Created a branching dialogue system, quest system, and faction system to support narrative and reputation driven gameplay.

- Developed trading and looting systems for containers, corpses, and world items, with inventory and item data structures.

- Added dynamic weather and day and night cycles that drive lighting, ambience, and potential gameplay hooks.

- Implemented character creation with a custom face builder and configurable player stats.

- Built NPC AI using behavior trees for patrol, combat, and interaction logic.

- Implemented consumables and item effects, crafting systems, and UI for managing all game systems in a cohesive way.
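For readers unfamiliar with behavior trees, here is a minimal sketch of the pattern with a selector that prefers combat over patrol. It is a simplified model with only success and failure results (no "running" state), and the node classes and the example `npc_brain` tree are hypothetical rather than taken from the prototype.

```python
SUCCESS, FAILURE = "success", "failure"


class Action:
    """Leaf node: runs a function against the NPC's state dictionary."""

    def __init__(self, fn):
        self.fn = fn

    def tick(self, state):
        return self.fn(state)


class Sequence:
    """Succeeds only if every child succeeds, evaluated in order."""

    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            if child.tick(state) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Tries children in priority order and stops at the first success."""

    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            if child.tick(state) == SUCCESS:
                return SUCCESS
        return FAILURE


def enemy_visible(state):
    return SUCCESS if state.get("enemy_visible") else FAILURE


def attack(state):
    state["last_action"] = "attack"
    return SUCCESS


def patrol(state):
    state["last_action"] = "patrol"
    return SUCCESS


# Combat takes priority; patrol is the fallback when no enemy is visible.
npc_brain = Selector(
    Sequence(Action(enemy_visible), Action(attack)),
    Action(patrol),
)
```

Ticking `npc_brain` once per AI update with the NPC's current state picks the highest-priority branch whose conditions hold.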

Let's Go Dig!

I designed and developed Let’s Go Dig!, an innovative cross platform mobile game that mixed casual digging gameplay with social and real world rewards. It was my first full stack project, and I used Parse to build a backend that handled user accounts, persistent progress, and a set of social systems that made the world feel shared and playful. Players could create an account, log in from different devices, and find treasure chests that contained actual redeemable prizes. Those prizes could be claimed or reburied into a friend’s game, which turned simple loot into a social gesture between players instead of a purely single player reward loop.

On top of the treasure system, I implemented a message in a bottle mechanic where players could bury notes for their friends to discover while digging. Finding and reading those messages added a personal, almost pen pal layer to the experience. Another standout feature allowed players to swap the destructible ground texture with a photo from their camera or gallery, so they could literally watch their character dig through an image that mattered to them. Working with a single artist and a limited budget, I focused on making each interaction visually satisfying and cohesive, using smart reuse of assets and effects so the game still felt polished and expressive.
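One common way to make an arbitrary photo diggable is to keep a per-pixel "solid" mask alongside the image and clear a circle of it wherever the character digs. The sketch below shows that idea only; how the shipped game stored its terrain is not described here, so the mask layout and the `dig` function are assumptions.

```python
def make_mask(width: int, height: int) -> list:
    """Create a ground mask where every pixel starts out solid (True)."""
    return [[True] * width for _ in range(height)]


def dig(mask: list, cx: int, cy: int, radius: int) -> int:
    """Clear a circular hole centered on (cx, cy); return pixels removed."""
    removed = 0
    height, width = len(mask), len(mask[0])
    for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
            inside = (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2
            if mask[y][x] and inside:
                mask[y][x] = False  # renderer would draw this pixel as dug out
                removed += 1
    return removed
```

A renderer would then show the photo's pixel wherever the mask is still solid and the tunnel background elsewhere, so the same digging code works for any image the player picks.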

Scope of Work:

- Designed and implemented core digging gameplay and progression for a cross platform mobile title.

- Built a Parse based backend for account creation, login, and persistent game data.

- Created a treasure chest system with real prize rewards that could be claimed or reburied into friends’ games.

- Implemented a message in a bottle feature so players could bury and discover personal messages in each other’s worlds.

- Added a custom ground system that used photos from the device camera or gallery as the destructible terrain.

- Collaborated with an artist to deliver engaging visuals and UI while working within tight budget constraints.

- Handled full stack responsibilities, from backend data models and APIs to client side gameplay, UI, and polish.

AirTime HD

At Radd3 I worked on a suite of HTC Vive training products that gave professional athletes a way to take high quality mental reps in Virtual Reality without adding physical wear and tear. The flagship experience was an NFL training tool where coaches could import offensive and defensive plays, assign matchups, and drop players into fully immersive game situations with crowd noise, pressure, and distractions. Players ran through their reads and responsibilities from a first person view, while coaches collected detailed session data that would be impossible to capture in a standard practice.

I contributed heavily to both the core VR experience and the Coaches Companion application that ran on Windows Surface tablets. On the VR side I implemented the UI systems that players used to navigate sessions and drills, built the audio system that handled crowd, coaching, and on field soundscapes, and created the wide receiver animation blending so catches could happen at variable, local positions instead of fixed keyframes. For the Coaches Companion, I implemented a responsive UI that made it easy to configure plays, schedule sessions, and review metrics from the sideline or meeting room, and I wired the client into the backend services using UnityWebRequest for authentication and data exchange.
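Blending a catch toward a variable ball position can be sketched as weighting a handful of authored catch clips by how close each clip's catch point is to the actual ball. The version below uses inverse-distance weights over 2D offsets; the clip names, the coordinate convention, and the weighting scheme are assumptions for illustration and not necessarily the method used in the product.

```python
import math


def blend_weights(clips: dict, ball: tuple, eps: float = 1e-6) -> dict:
    """Return normalized blend weights for each catch clip.

    `clips` maps a clip name to the (x, y) ball offset it was authored for.
    """
    dists = {name: math.dist(pos, ball) for name, pos in clips.items()}

    # If the ball sits exactly on an authored catch point, use that clip alone.
    for name, d in dists.items():
        if d < eps:
            return {n: (1.0 if n == name else 0.0) for n in clips}

    inverse = {name: 1.0 / d for name, d in dists.items()}
    total = sum(inverse.values())
    return {name: w / total for name, w in inverse.items()}
```

The resulting weights sum to one, so they can be fed to whatever animation mixer is in use to pose the receiver between the authored catches.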

During my time at Radd3 I also solo developed Matt Williams’ VR Batting Practice, another HTC Vive experience that placed players at home plate in a major league stadium. The simulator supported multiple pitch types like sliders, curveballs, sinkers, knuckleballs, change ups, and fastballs, and at the end of each session it presented a clear, visual breakdown of contact quality and outcomes to help players refine their swing. Both products combined realism, feedback, and repetition to give pros meaningful practice time in VR.

Scope of Work:

- Developed an HTC Vive NFL training platform that let players take immersive mental reps on imported offensive and defensive plays.

- Built VR UI systems for session flow, play selection, and in experience interaction.

- Implemented the audio system for crowd, on field, and coaching feedback to simulate game day pressure.

- Created wide receiver animation blending so catch animations could adjust to different ball locations around the player.

- Developed the Coaches Companion application targeting Windows Surface tablets with a responsive, touch friendly UI.

- Integrated client to backend communication using UnityWebRequest for authentication, session data, and analytics.

After completing this project for Radd3, I went on to design and develop Matt Williams’ VR Batting Practice for HTC Vive, including pitch logic, hitting feedback, and post session performance UI.

WebGL Applications

NanoRes Digital Collectible Launchpad

I led the design and development of the NanoRes Digital Collectible Mint Launchpad, a first-of-its-kind WebGL experience built in Unity on Solana. Instead of a static mint page, it gives collectors a playful, interactive way to connect their wallets, explore the collection, and mint in real time, all framed as a branded, mini-game-like experience that teams can ship directly in the browser.